Forget Chatbots, The Future is Autonomous Agents

And you get an AI assistant! And you get an AI assistant!

Sci-fi has been predicting autonomous systems for decades. One example is Tony Stark’s J.A.R.V.I.S., in this exchange from Iron Man:

Tony Stark: "J.A.R.V.I.S., are you up?"

J.A.R.V.I.S: "For you sir, always."

Tony: "I'd like to open a new project file, index as: Mark II."

J: "Shall I store this on the Stark Industries' central database?"

Tony: "I don't know who to trust right now. 'Til further notice, why don't we just keep everything on my private server."

J: "Working on a secret project, are we, sir?"

Tony: "I don't want this winding up in the wrong hands. Maybe in mine, it could actually do some good."

This is an example of an autonomous agent [1] - a ChatGPT-like AI that can take action in the digital and real world on behalf of the user.

In 2008, when that scene hit theaters, it seemed very far off. Today, we’re almost there.

Assume you want to have an AI book a flight. Fortunately, an AI like ChatGPT already “understands” the concepts of airlines, tickets, destinations, etc. It can parse your intent if you ask, “Find flights from Portland to Chicago. Optimize for price but filter out departure times that are super early or late.”
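To make that request concrete, here is a rough sketch of what it boils down to once the intent is parsed: a filter on departure time followed by a sort on price. Everything in it is an assumption for illustration, including the `Flight` fields, the `pick_flights` helper, the made-up fares, and the reading of “super early or late” as before 7 AM or after 9 PM.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class Flight:
    airline: str       # placeholder airline label
    depart: time       # local departure time
    price_usd: float   # ticket price in US dollars


def pick_flights(flights, earliest=time(7, 0), latest=time(21, 0)):
    """Drop departures outside [earliest, latest], then sort cheapest first."""
    acceptable = [f for f in flights if earliest <= f.depart <= latest]
    return sorted(acceptable, key=lambda f: f.price_usd)


# Made-up options for Portland to Chicago (not real fares).
options = [
    Flight("A", time(5, 30), 119.0),   # too early
    Flight("B", time(9, 15), 162.0),
    Flight("C", time(13, 40), 148.0),
    Flight("D", time(23, 5), 99.0),    # too late
]

best = pick_flights(options)
print([f.airline for f in best])  # → ['C', 'B']
```

The cheapest flight overall (D) is dropped because it leaves too late, which is exactly the trade-off the plain-English request describes.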

Even though it can understand what you’re asking, it needs two new things to actually do it. First, it needs a virtual keyboard and mouse so that it can interact with the airline websites. Second, it needs to understand user interfaces built for humans.

For the AI to acquire these capabilities, it is trained with “behavioral cloning and reinforcement learning”.

Basically, the AI watches videos of these actions being performed. It then tries to accomplish the task and its performance is rated. Through iteration, it learns. It doesn’t need to be trained on every airline site. The sites are similar enough that it can extrapolate from enough training examples, much like a human can. And it likely won’t break if a given airline changes its user interface.
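For the curious, the “watching” half of that recipe (behavioral cloning) can be sketched in a few lines. This is a toy version under heavy assumptions: real systems learn from pixels with neural networks, while here the screen states and actions are made-up labels and the “model” is a lookup table that copies whatever action the human demonstrators chose most often. The rating-and-retrying half (reinforcement learning) is left out.

```python
from collections import Counter, defaultdict


def clone_behavior(demonstrations):
    """Tabular behavioral cloning: for each observed state,
    imitate the action that appears most often in the demonstrations."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical (state, action) pairs extracted from recordings of people booking flights.
demos = [
    ("search_form", "type_cities"),
    ("search_form", "type_cities"),
    ("search_form", "click_ad"),        # one demonstrator got distracted
    ("results_page", "sort_by_price"),
    ("results_page", "sort_by_price"),
    ("checkout", "click_pay"),
]

policy = clone_behavior(demos)
print(policy["search_form"])  # → type_cities
```

The learned policy ignores the stray `click_ad` demonstration because the majority behavior wins, which is a small taste of how imitation averages over many examples.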

Normally, if a computer were to do an automated task for you, it would communicate with other software systems through “Application Programming Interfaces” - APIs. This is how, for example, Expedia can talk to all the airlines and report back on flight options. It’s using a special computer-to-computer interface that typically requires programming, “middleware”, or, at a minimum, tedious “low-code” solutions.

The coming autonomous agents, however, will be able to navigate the UI by “seeing” the screen pixels or examining the page content [2]. They will navigate the website just like you or me.
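As a minimal sketch of the “examining the page content” route, the snippet below reads a page’s HTML and lists the controls a person would interact with. The page markup and the `ControlFinder` class are invented for illustration; it uses only Python’s standard `html.parser` module and says nothing about how any real agent is built.

```python
from html.parser import HTMLParser


class ControlFinder(HTMLParser):
    """Collects the text inputs and buttons found on a page."""

    def __init__(self):
        super().__init__()
        self.controls = []       # (kind, label) pairs in page order
        self._in_button = False  # True while inside a <button> element

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "input":
            self.controls.append(("input", attributes.get("name", "")))
        elif tag == "button":
            self._in_button = True

    def handle_data(self, data):
        # The visible text inside a <button> serves as its label.
        if self._in_button and data.strip():
            self.controls.append(("button", data.strip()))

    def handle_endtag(self, tag):
        if tag == "button":
            self._in_button = False


# A made-up flight search form.
page = """
<form>
  <input name="from"> <input name="to">
  <button>Search flights</button>
</form>
"""

finder = ControlFinder()
finder.feed(page)
print(finder.controls)
# → [('input', 'from'), ('input', 'to'), ('button', 'Search flights')]
```

An agent would then decide which of these controls to type into or click, given the user’s request.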

For the physical world (i.e., the World of Atoms) an AI can likewise be trained on videos of an action being performed. Once trained, the AI can control a robotic arm to perform the same task [3].

You, as the human, then control the AI the same way you control ChatGPT. You simply ask it to do something in plain English (or your language of choice).

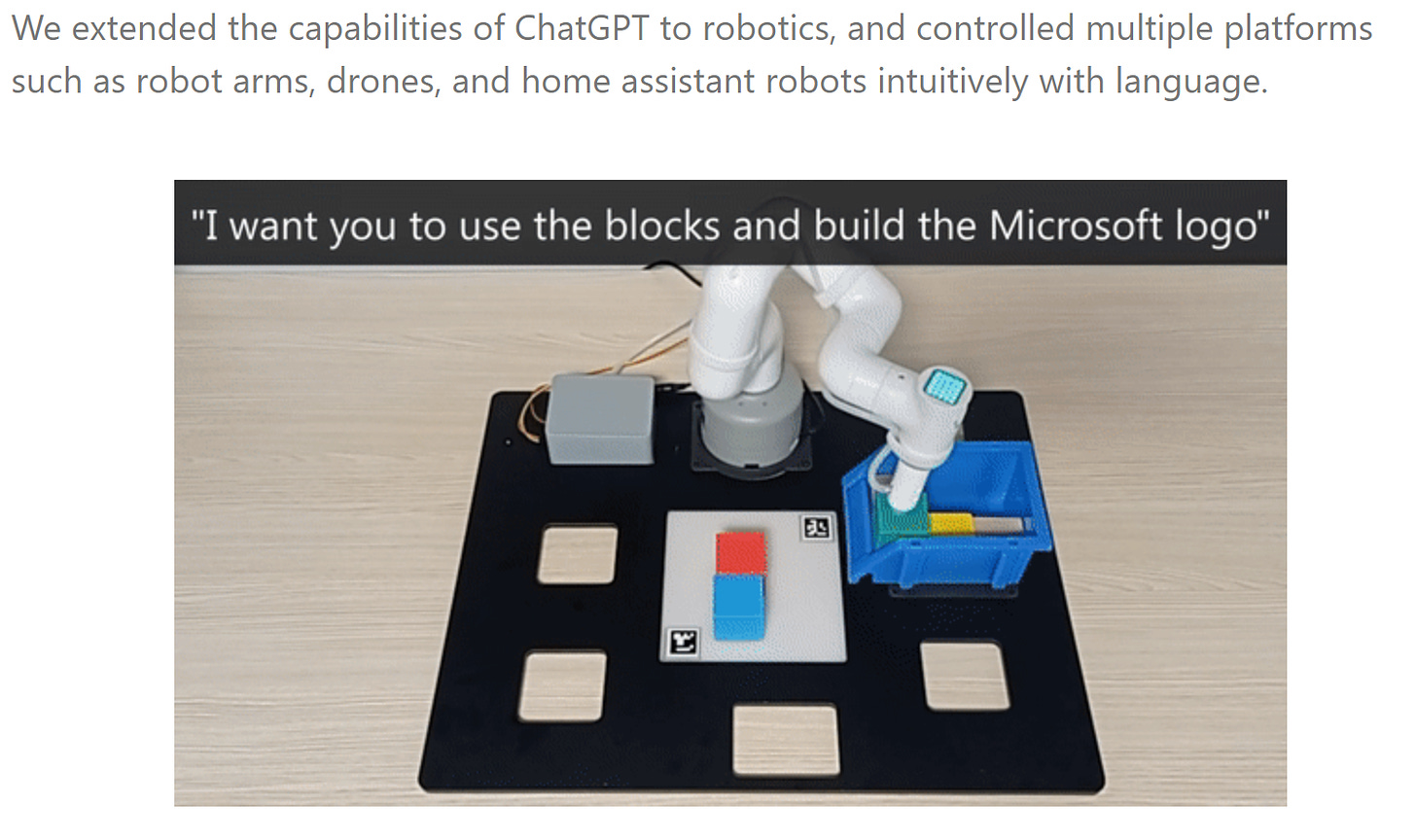

It’s happening today. Microsoft reports hooking ChatGPT up to drones, robotic arms, and home assistant robots [4].

The End Game

The best and brightest in AI are working on autonomous agents and describe them as “the final frontier” for chat models [5]. If this comes to fruition, everyone gets their own J.A.R.V.I.S., and the user interface for software will need to be fundamentally rethought, because you may primarily interact with it through chat rather than icons, boxes, and buttons.

There’s opportunity in consumer applications like booking flights, but the real money may be in automating business software. Business applications are often powerful, but that power can come with byzantine user interfaces that require long sequences of steps to accomplish even simple tasks. A key company to watch in this space is Adept [6], which aims to build AI that can automate any software process.

In the future, people may be able to interact with business software as follows:

Human: The Gummy Bear Clothing Company got back to me and they’re ready for a proposal.

AI: Excellent. Shall I move them to the “Proposal Needed” stage in Salesforce?

Human: Yes, and can you listen to the call recording and stub out a proposal?

AI: Of course. Is it the recording from 10 AM with Ernie Jambdowzingale?

Human: That’s the one.

AI: I’ll review the recording and have a preliminary proposal ready in a few minutes.

We probably spend 20% of our time deciding what to do, and 80% of our time clicking and typing to actually do it. Autonomous assistants promise to take a big bite out of that 80%.

[1] The Near Future of AI is Action Driven - https://jmcdonnell.substack.com/p/the-near-future-of-ai-is-action-driven

[2] AI learns to operate in Minecraft - https://minedojo.org/

[3] AI learns to control robotic arm from watching video - https://jimfan.me/

[4] Microsoft connects ChatGPT to robots - https://www.microsoft.com/en-us/research/group/autonomous-systems-group-robotics/articles/chatgpt-for-robotics/

[5] The people working at the forefront of autonomous systems and the hints they’re dropping - https://www.marketingaiinstitute.com/blog/world-of-bits

[6] Adept aims to build AI that can automate any software process - https://techcrunch.com/2022/04/26/2304039/